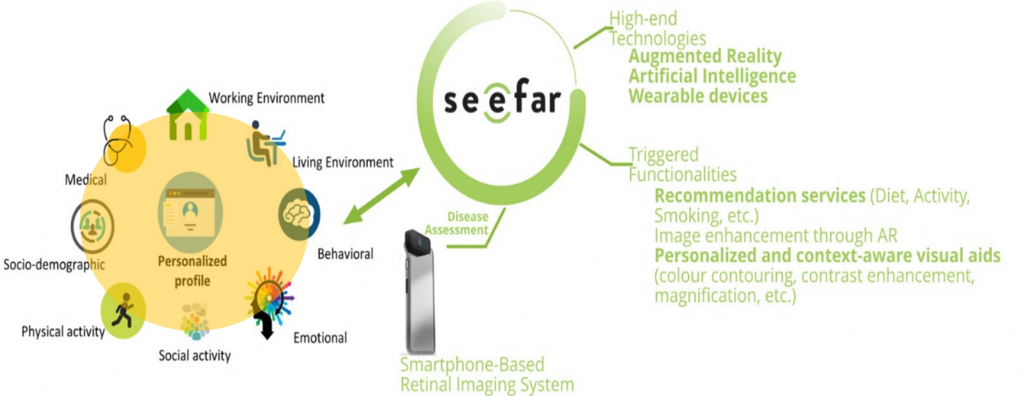

One of the innovative features of the See Far solution is that its suggestions adapt to the user's profile. This is achieved through the Personalized visual recommendation service.

The personalized user profile consists of the socio-demographic profile (e.g. age, occupation, marital status), the medical profile (type and severity of the vision deficiency, comorbidities, etc.), the physical activity profile, the social activity profile, the behavioural profile in the working and living environment, and the cognitive and emotional profile.
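The profile components listed above can be pictured as one nested data model. This is a minimal sketch, assuming Python dataclasses; every class and field name is hypothetical, since the source does not describe how See Far actually stores the profile.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SocioDemographicProfile:
    age: int
    occupation: str
    marital_status: str


@dataclass
class MedicalProfile:
    vision_deficiency: str                       # type of vision deficiency (free text here)
    severity: str                                # e.g. "mild", "moderate", "severe" (assumed scale)
    comorbidities: List[str] = field(default_factory=list)


@dataclass
class CognitiveEmotionalProfile:
    arousal: Optional[float] = None              # estimated emotional arousal (unset until measured)
    cognitive_workload: Optional[float] = None   # estimated cognitive workload (unset until measured)


@dataclass
class UserProfile:
    # Hypothetical container tying the six profile components together.
    socio_demographic: SocioDemographicProfile
    medical: MedicalProfile
    physical_activity: Dict[str, float] = field(default_factory=dict)
    social_activity: Dict[str, float] = field(default_factory=dict)
    behavioural: Dict[str, float] = field(default_factory=dict)   # e.g. activity -> share of time
    cognitive_emotional: CognitiveEmotionalProfile = field(
        default_factory=CognitiveEmotionalProfile
    )
```

Using `default_factory` gives each user an independent, empty dictionary for the activity-derived profiles, so updating one user's behavioural profile never leaks into another's.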

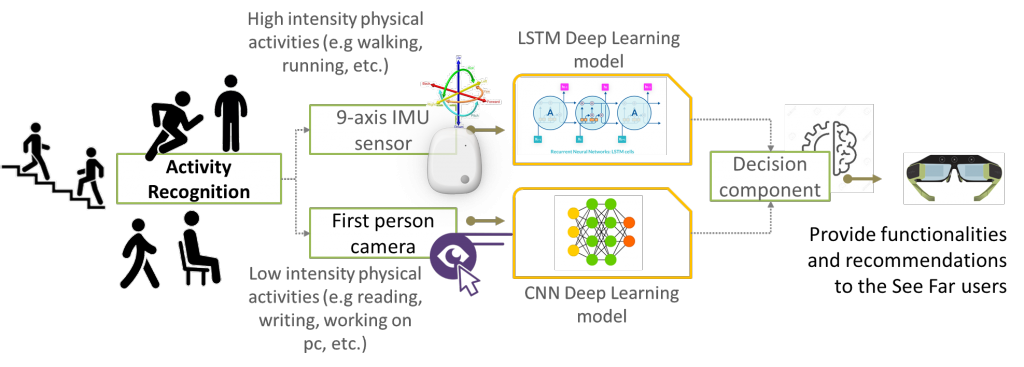

The classification of the user's daily activities, which determines the user's behavioural profile in the living and working environment, is based on data from a first-person camera and an IMU sensor mounted on the smart glasses.
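Sequence models for activity recognition typically consume an IMU stream as fixed-length windows rather than as individual samples. The source does not state the window length, overlap, or sampling rate, so the sketch below assumes a plain sliding window over accelerometer triples; the function name `sliding_windows` is hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az) accelerometer reading (assumed layout)


def sliding_windows(samples: Sequence[Sample], window: int, step: int) -> List[List[Sample]]:
    """Split an IMU stream into fixed-length, possibly overlapping windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if window <= 0 or step <= 0:
        raise ValueError("window and step must be positive")
    return [
        list(samples[start:start + window])
        for start in range(0, len(samples) - window + 1, step)
    ]
```

For example, at an assumed 50 Hz sampling rate, `sliding_windows(stream, window=100, step=50)` would yield 2-second windows with 50% overlap, each of which could be passed to the sequence model as one input.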

A Long Short-Term Memory (LSTM) model has been built to recognize locomotive activities (i.e. walking, sitting, standing, going upstairs, going downstairs) from acceleration data, while a ResNet model is used to recognize stationary activities (i.e. eating, reading, writing, watching TV, working on a PC). The outputs of the two models are fused to reach a final decision on the performed activity and to trigger the corresponding functionality.
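The source does not specify how the LSTM and ResNet outputs are fused. One plausible late-fusion rule, sketched here purely as an assumption, takes the LSTM's locomotive label by default and defers to the ResNet's stationary label only when the wearer is sitting or standing and the ResNet is confident enough. The `min_conf` threshold, the `fuse` function, and the activity-to-functionality table are all hypothetical.

```python
from typing import Dict, Optional, Tuple

# Postures in which a stationary activity (eating, reading, ...) can take place.
STATIC_POSTURES = ("sitting", "standing")

# Hypothetical mapping from recognized activity to a glasses functionality.
FUNCTIONALITY: Dict[str, str] = {
    "reading": "magnify_text",
    "writing": "magnify_text",
    "watching_tv": "enhance_contrast",
    "working_on_pc": "enhance_contrast",
    "upstairs": "highlight_steps",
    "downstairs": "highlight_steps",
}


def argmax(probs: Dict[str, float]) -> Tuple[str, float]:
    """Return the most probable label and its probability."""
    label = max(probs, key=probs.get)
    return label, probs[label]


def fuse(
    lstm_probs: Dict[str, float],
    resnet_probs: Dict[str, float],
    min_conf: float = 0.5,
) -> Tuple[str, Optional[str]]:
    """Combine both models' class probabilities into (activity, functionality)."""
    locomotion, _ = argmax(lstm_probs)
    # Only consult the vision model when the IMU says the user is not moving.
    if locomotion in STATIC_POSTURES and resnet_probs:
        activity, confidence = argmax(resnet_probs)
        if confidence >= min_conf:
            return activity, FUNCTIONALITY.get(activity)
    # Otherwise fall back to the locomotive label; None means no functionality to trigger.
    return locomotion, FUNCTIONALITY.get(locomotion)
```

A hierarchical rule like this exploits the fact that the two label sets are disjoint; a learned fusion, such as a small classifier over both probability vectors, would be an alternative design.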

In parallel, the embedded eye-tracking system tracks low-level eye motions. These are then used by AI-based algorithms that estimate the user's emotional arousal and cognitive workload. These two variables define the user's cognitive and emotional profile and are used to personalize the experience of wearing the See Far smart glasses.
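The source does not describe the arousal and workload algorithms themselves. As an illustration only, the sketch below computes a few window-level ocular features of the kind such models often take as input: mean pupil diameter, blink rate, and saccade rate, with saccades detected by a simple velocity threshold (I-VT). The `EyeSample` layout, the 30 deg/s threshold, and the feature names are assumptions; a separate classifier, not shown, would map these features to arousal and workload estimates.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class EyeSample:
    t: float                 # timestamp in seconds
    x: float                 # horizontal gaze angle in degrees
    y: float                 # vertical gaze angle in degrees
    pupil: Optional[float]   # pupil diameter in mm; None while the eye is closed


def ocular_features(samples: Sequence[EyeSample], saccade_deg_s: float = 30.0) -> Dict[str, float]:
    """Hypothetical feature extractor for one window of eye-tracking samples."""
    pupils = [s.pupil for s in samples if s.pupil is not None]
    pairs = list(zip(samples, samples[1:]))
    # A blink starts where the pupil goes from visible to missing.
    blinks = sum(1 for a, b in pairs if a.pupil is not None and b.pupil is None)
    # I-VT: count each run of above-threshold angular velocity as one saccade.
    saccades, in_saccade = 0, False
    for a, b in pairs:
        dt = b.t - a.t
        if dt <= 0:
            continue
        moving = math.hypot(b.x - a.x, b.y - a.y) / dt > saccade_deg_s
        if moving and not in_saccade:
            saccades += 1
        in_saccade = moving
    duration = samples[-1].t - samples[0].t if len(samples) > 1 else 0.0
    return {
        "mean_pupil_mm": sum(pupils) / len(pupils) if pupils else float("nan"),
        "blink_rate_hz": blinks / duration if duration > 0 else 0.0,
        "saccade_rate_hz": saccades / duration if duration > 0 else 0.0,
    }
```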